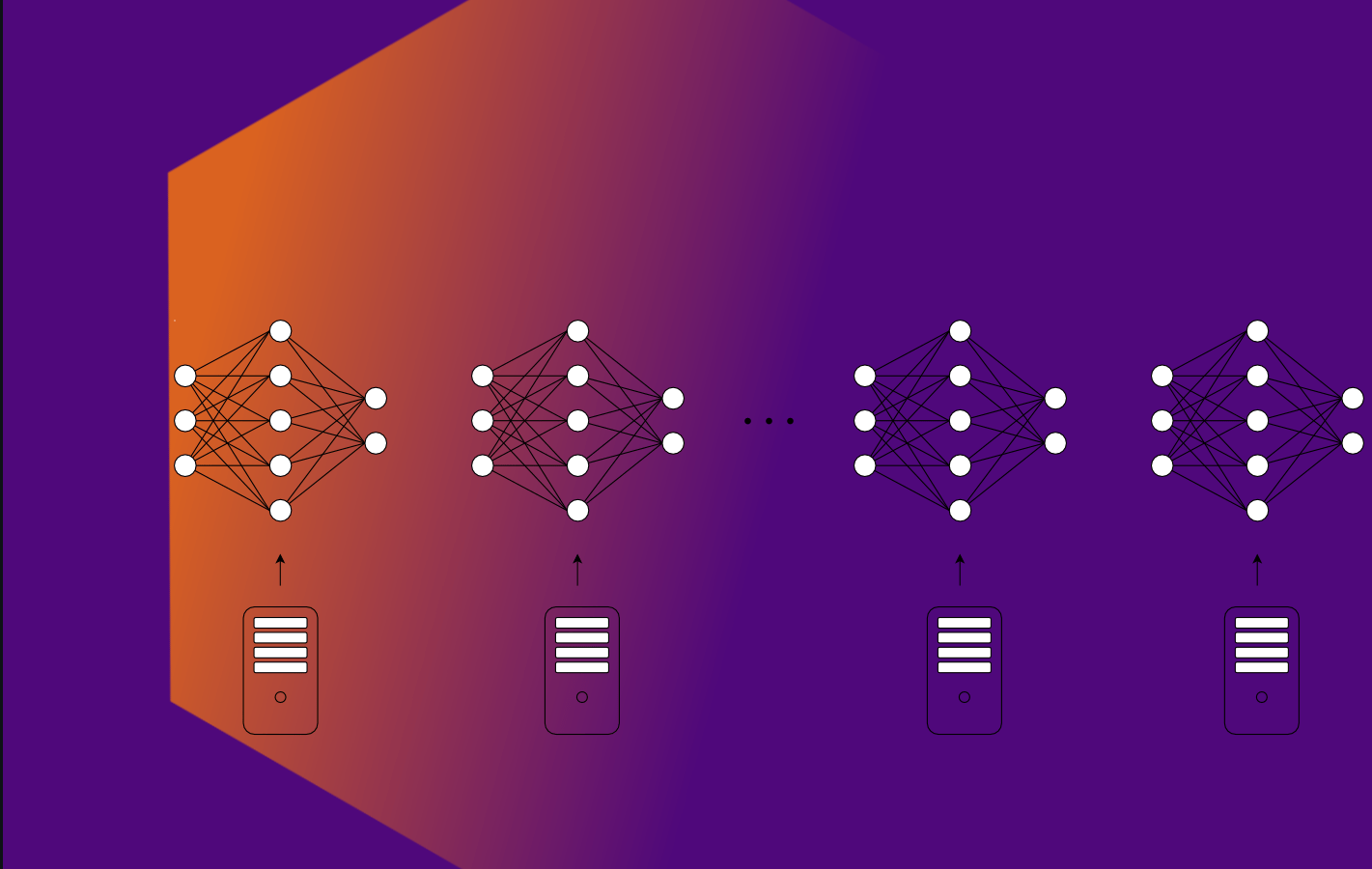

Are you tired of training a neural network for what seems like forever? You might want to consider distributed learning, and in particular data parallelism, one of the most popular recent approaches in distributed deep learning. This literature review introduces the underlying distribution techniques. You will get an overview of different ways to run Stochastic Gradient Descent (SGD) in parallel across multiple machines, along with the issues and pitfalls that come with them. After a recap of Stochastic Gradient Descent and data parallelism, Synchronous SGD and Asynchronous SGD are explained and compared.

Good to know:

To get the most out of this article, you should already be familiar with the basics of machine learning and know how artificial neural networks work.

Course:

Implementing ANNs with TensorFlow, WiSe 2022, Prof. Dr. Gordon Pipa

Spoiler Alert

The comparison between Synchronous SGD and Asynchronous SGD shows that the former is the safer choice, while the latter focuses on improving the use of resources.
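To make the contrast concrete, here is a minimal single-process sketch in NumPy. It is an illustration under stated assumptions, not the implementation discussed in the article: the function names (`gradient`, `sync_sgd_step`, `async_sgd_round`), the linear-regression task, and the way asynchrony is simulated (all workers read the same weights, then push their updates one after another) are hypothetical choices made for this example.

```python
import numpy as np

def gradient(w, X, y):
    """Mean-squared-error gradient for a linear model y ≈ X @ w."""
    return 2.0 * X.T @ (X @ w - y) / len(y)

def sync_sgd_step(w, shards, lr):
    """Synchronous step: every worker computes a gradient on the same
    weights, the gradients are averaged, and one update is applied."""
    grads = [gradient(w, X, y) for X, y in shards]
    return w - lr * np.mean(grads, axis=0)

def async_sgd_round(w, shards, lr):
    """Asynchronous round (simulated): all workers read the weights at the
    start, then push their updates one after another without waiting, so
    every gradient after the first is applied to weights it was not
    computed on (it is stale)."""
    stale_w = w.copy()
    for X, y in shards:
        w = w - lr * gradient(stale_w, X, y)
    return w

# Toy data, split evenly across 4 simulated workers (assumed setup).
rng = np.random.default_rng(0)
X = rng.normal(size=(120, 3))
true_w = np.array([1.0, -2.0, 0.5])
y = X @ true_w
shards = list(zip(np.split(X, 4), np.split(y, 4)))

w0 = np.zeros(3)
w_sync = sync_sgd_step(w0, shards, lr=0.1)
w_async = async_sgd_round(w0, shards, lr=0.1)
```

With equally sized shards, the synchronous step matches a single full-batch gradient step, which is what makes its behaviour predictable. In this simulation the asynchronous round applies four stale updates in a row, so no worker waits for another, but the weights move four times as far as the gradients were meant to take them.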

Enjoyed reading the article?

Delve into a deeper conversation with the author in our podcast episode!